This evaluation report was provided by a user from VeriMake. The test implements image transmission and face recognition on MYIR’s MYD-YT507H development board using about 50 lines of Python code.

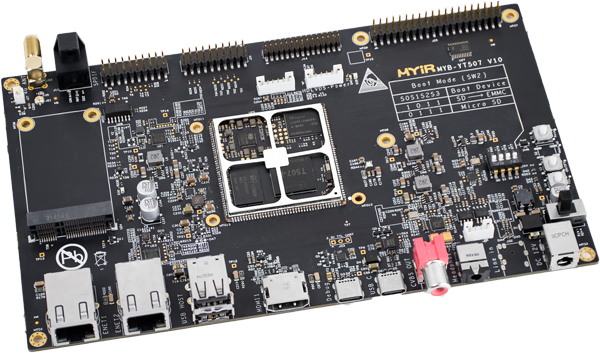

The MYD-YT507H Development Board consists of a compact CPU module, the MYC-YT507H, and a base board, which together provide a complete evaluation platform for the Allwinner T507-H processor. The T507-H belongs to Allwinner's T5 series and features a 1.5GHz quad-core Cortex-A53 CPU and a Mali-G31 MP2 GPU.

Features:

- MYC-YT507H CPU Module as Controller Board

- 1.5GHz ALLWINNER T507-H Quad-core ARM Cortex-A53 MPU

- 1GB/2GB LPDDR4, 8GB eMMC Flash, 32Kbit EEPROM

- Serial ports, 2 x USB 2.0 Host, 1 x USB 2.0 OTG, TF Card Slot

- 1 x Gigabit Ethernet, 1 x 10/100Mbps Ethernet, 4G LTE Module Interface

- Supports Dual LVDS, HDMI and CVBS Display Output Interfaces

- Supports MIPI-CSI and DVP Camera Input

- Supports Running Linux and Android OS

- Optional LCD Modules, Camera Modules, WiFi/BT Module and RPI Module (RS232/RS485)

MYD-YT507H Development Board

The MYD-YT507H Development Board and the MYC-YT507H CPU Module, both based on the Allwinner T507-H processor, can be used in a wide range of fields, including the Internet of Things (IoT), automotive electronics, commercial displays, industrial control, medical instruments and intelligent terminals.

The board can run Linux and Android OS. MYIR provides abundant software resources and detailed documentation, which help developers work more efficiently, shorten the development cycle and reduce time to market.

Here we use this development board to build a simple face recognition application on top of image transmission. Everything is written in Python. The actual code is under 50 lines, so it is relatively easy to get started. In this evaluation, we try wireless image transmission and add face recognition on top of it to detect whether a face appears in the video. The test uses a USB camera, which plugs directly into the development board.

Step 01 Connect the USB Camera

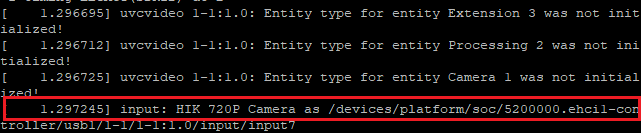

After connecting the camera, run the dmesg command. The output shows that the camera has been detected and lists it as HIK 720p Camera.

Next, use the v4l-utils tools to query the camera's detailed parameters.

To install v4l-utils: sudo apt install v4l-utils

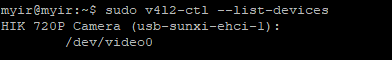

Run sudo v4l2-ctl --list-devices to list the video devices and their device nodes. For example, /dev/video0 corresponds to index 0 in OpenCV's cv2.VideoCapture(0).
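You may prefer to pick the camera index from a script rather than by eye. In that case, the output of v4l2-ctl --list-devices can be parsed.

The sketch below assumes the usual layout: each device name sits on an unindented line ending in a colon, and its /dev nodes follow on indented lines. The functions parse_v4l2_devices and video_index are hypothetical helpers. The sample text is illustrative only and was not captured from this board.

```python
import re

# Illustrative sample only (not captured from the board): one header line
# per device, followed by its indented /dev nodes.
SAMPLE_OUTPUT = (
    "HIK 720p Camera (usb-example-1):\n"
    "\t/dev/video0\n"
    "\t/dev/video1\n"
)


def parse_v4l2_devices(text):
    """Map each device header in `v4l2-ctl --list-devices` output to its /dev nodes."""
    devices = {}
    current = None
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank separator lines between devices
        if line[0].isspace():
            # Indented line: a device node belonging to the most recent header
            if current is not None:
                devices[current].append(line.strip())
        else:
            # Unindented line: a new device header, trailing colon removed
            current = line.strip().rstrip(':')
            devices[current] = []
    return devices


def video_index(node):
    """Return N for '/dev/videoN' (the index cv2.VideoCapture(N) opens), else None."""
    match = re.fullmatch(r'/dev/video(\d+)', node)
    return int(match.group(1)) if match else None


if __name__ == '__main__':
    for name, nodes in parse_v4l2_devices(SAMPLE_OUTPUT).items():
        print(name, [(node, video_index(node)) for node in nodes])
```

On the board, you could pass it the text from subprocess.run(['v4l2-ctl', '--list-devices'], capture_output=True, text=True).stdout. A camera may expose more than one node. If so, try the first one listed.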

Step 02 Use OpenCV for Face Recognition

Before starting, install a few libraries on the development board:

```shell
sudo apt update
sudo apt install python3-opencv
pip3 install --upgrade pip
pip3 install zmq
pip3 install pybase64
```
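Before running the scripts, it can help to confirm that the modules they import are available, both on the board and on the PC. The sketch below is a small check using the standard library's importlib.util.find_spec. check_modules is a hypothetical helper name.

Two notes on the package names. The zmq module is provided by the pyzmq package. The scripts import Python's built-in base64 module, so they do not themselves need pybase64.

```python
import importlib.util


def check_modules(names):
    """Return {module_name: True/False} depending on whether it can be found for import."""
    # find_spec returns None for a top-level module that is not installed
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == '__main__':
    # Modules imported by the board and PC scripts in this article
    for name, found in check_modules(['cv2', 'zmq', 'numpy', 'base64']).items():
        print('{:8s} {}'.format(name, 'OK' if found else 'MISSING'))
```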

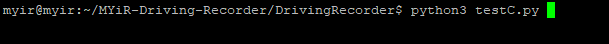

First, run the following program on the development board to read the camera data and send the data to the PC.

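```python
import base64

import cv2
import zmq


def main():
    '''
    Main function: capture frames from the USB camera and send them to the PC.
    '''
    IP = '192.168.2.240'  # IP address of the PC that receives the video

    # Create and configure the video capture object (index 0 = /dev/video0)
    cap = cv2.VideoCapture(0)
    print("Camera opened: {}".format(cap.isOpened()))
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, 320)   # Set image width
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)  # Set image height

    # Set up a ZeroMQ PAIR socket over TCP: the PC binds, the board connects
    context = zmq.Context()
    footage_socket = context.socket(zmq.PAIR)
    footage_socket.connect('tcp://%s:5555' % IP)

    # Send loop (reconstructed to match the PC-side receiver:
    # each frame is JPEG-encoded, base64-encoded and sent as one string message)
    while True:
        ok, frame = cap.read()
        if not ok:
            break  # camera stopped delivering frames
        ok, buffer = cv2.imencode('.jpg', frame)
        if not ok:
            continue  # skip frames that fail to encode
        footage_socket.send_string(base64.b64encode(buffer.tobytes()).decode('ascii'))

    cap.release()


if __name__ == '__main__':
    main()
```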
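The board and the PC agree on a simple message format. Each frame is JPEG-encoded, the JPEG bytes are base64-encoded into ASCII text, and that text travels as one ZeroMQ string message.

The standard-library sketch below shows the round trip and the size cost of base64, which is 4 output characters per 3 input bytes. The names encode_payload, decode_payload and base64_size are hypothetical, and the byte strings stand in for real JPEG data.

```python
import base64


def encode_payload(jpeg_bytes):
    """Board side: turn encoded image bytes into the ASCII text that is sent."""
    return base64.b64encode(jpeg_bytes).decode('ascii')


def decode_payload(text):
    """PC side: recover the original image bytes from the received text."""
    return base64.b64decode(text)


def base64_size(n_bytes):
    """Length of the base64 text for n_bytes of input: 4 chars per 3-byte group, padded."""
    return (n_bytes + 2) // 3 * 4


if __name__ == '__main__':
    fake_jpeg = bytes(range(256)) * 4  # stand-in for real JPEG bytes
    text = encode_payload(fake_jpeg)
    print(len(fake_jpeg), len(text), decode_payload(text) == fake_jpeg)
```

For scale, a raw 320x240 BGR frame is 320 x 240 x 3 = 230,400 bytes, which would become 307,200 characters in base64. JPEG compression keeps the actual messages much smaller.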

The PC then receives the data from the development board and decodes and processes the video frame by frame. When a face is detected, it is outlined with a red rectangle.
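ZeroMQ delivers whole messages, so each recv_string() call on the PC returns exactly one frame. A plain TCP socket, by contrast, is a byte stream with no message boundaries, so an application using it directly would need its own framing.

The sketch below illustrates that alternative technique, a 4-byte length prefix, using the standard socket module over a local socket pair. It is not part of the original setup, and send_msg, recv_exact and recv_msg are hypothetical names.

```python
import socket
import struct


def send_msg(sock, payload):
    """Send one message as a 4-byte big-endian length followed by the payload."""
    sock.sendall(struct.pack('!I', len(payload)) + payload)


def recv_exact(sock, n):
    """Read exactly n bytes from a stream socket (TCP may deliver data in pieces)."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError('socket closed mid-message')
        chunks.append(chunk)
        n -= len(chunk)
    return b''.join(chunks)


def recv_msg(sock):
    """Receive one length-prefixed message."""
    (length,) = struct.unpack('!I', recv_exact(sock, 4))
    return recv_exact(sock, length)


if __name__ == '__main__':
    # Local demo over a connected socket pair instead of a real network link
    a, b = socket.socketpair()
    with a, b:
        for frame in (b'first frame', b'second, longer frame'):
            send_msg(a, frame)
        print(recv_msg(b), recv_msg(b))
```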

On the PC, open Anaconda Prompt and run the following script. It needs OpenCV, pyzmq and NumPy installed in that environment.

```python
import base64

import cv2
import numpy as np
import zmq


def main():
    '''
    Main function: receive frames from the board and detect faces.
    '''
    # Bind a ZeroMQ PAIR socket on port 5555 and wait for the board to connect
    context = zmq.Context()
    footage_socket = context.socket(zmq.PAIR)
    footage_socket.bind('tcp://*:5555')
    cv2.namedWindow('Stream', flags=cv2.WINDOW_NORMAL | cv2.WINDOW_KEEPRATIO)

    # Load the Haar cascade once, not on every frame. The XML file ships with
    # OpenCV and must be reachable at this path (here: the working directory).
    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')

    while True:
        print("Listening")
        frame = footage_socket.recv_string()            # Receive one base64-encoded frame
        img = base64.b64decode(frame)                   # Decode base64 into raw JPEG bytes
        npimg = np.frombuffer(img, dtype=np.uint8)      # Wrap the bytes in a 1-D uint8 array
        source = cv2.imdecode(npimg, cv2.IMREAD_COLOR)  # Decode the JPEG into a BGR image
        if source is None:
            continue  # skip corrupted frames

        grayimg = cv2.cvtColor(source, cv2.COLOR_BGR2GRAY)
        faces = face_cascade.detectMultiScale(grayimg, 1.2, 5)  # scaleFactor=1.2, minNeighbors=5
        for (x, y, w, h) in faces:
            cv2.rectangle(source, (x, y), (x + w, y + h), (0, 0, 255), 2)  # red box (BGR order)

        cv2.imshow('Stream', source)
        if cv2.waitKey(1) == ord('q'):  # press q to quit
            break

    cv2.destroyAllWindows()
    footage_socket.close()
    context.term()


if __name__ == '__main__':
    # Program entry
    main()
```
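The two numbers passed to detectMultiScale control the search:

- scaleFactor=1.2 shrinks the image by a factor of 1.2 at each pyramid level.
- minNeighbors=5 keeps only candidates supported by several overlapping raw detections. A higher value gives fewer false positives but may miss faces.

The sketch below is a simplified model, not OpenCV's exact code. It lists the approximate face sizes searched on a 320x240 frame, assuming the 24x24 base window of haarcascade_frontalface_default.xml. pyramid_window_sizes is a hypothetical helper.

```python
def pyramid_window_sizes(width, height, scale_factor=1.2, base=24):
    """Approximate face sizes (in original-frame pixels) searched by detectMultiScale.

    Simplified model: at level k the frame is shrunk by scale_factor**k and a fixed
    base x base window is scanned, which matches a face of about
    base * scale_factor**k pixels in the original frame. Levels stop once the
    shrunken frame is smaller than the window.
    """
    if scale_factor <= 1:
        raise ValueError('scale_factor must be greater than 1')
    sizes = []
    factor = 1.0
    while round(width / factor) >= base and round(height / factor) >= base:
        sizes.append(round(base * factor))
        factor *= scale_factor
    return sizes


if __name__ == '__main__':
    # 320x240 matches the resolution set on the board; 24 is the assumed base window
    print(pyramid_window_sizes(320, 240))
```

With scaleFactor=1.2, this model gives 13 levels on a 320x240 frame, covering face sizes from 24 to about 214 pixels. A larger scale factor means fewer levels and less work per frame, at the cost of coarser size coverage.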

In normal operation, the console keeps printing the listening message while the script waits for each frame.

At this point, the camera image appears in a window on the PC, and faces are detected automatically when they appear, although there is some delay.
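To put a number on the delay, the receiver loop could time incoming frames. The FrameRateMeter class below is a hypothetical helper. Call tick() once per received frame and it returns a rolling frames-per-second estimate. The clock parameter exists only so it can be tested with fake timestamps.

Note that this measures throughput, not end-to-end latency. If the PC processes frames more slowly than the board sends them, messages may queue up in ZeroMQ's buffers. That would show up as a lag that grows over time.

```python
import time
from collections import deque


class FrameRateMeter:
    """Rolling frames-per-second estimate over the last `window` frame timestamps."""

    def __init__(self, window=30, clock=time.perf_counter):
        if window < 2:
            raise ValueError('window must hold at least 2 timestamps')
        self.stamps = deque(maxlen=window)  # oldest timestamps drop out automatically
        self.clock = clock

    def tick(self):
        """Record one frame arrival; return the FPS estimate (0.0 until 2 frames are seen)."""
        self.stamps.append(self.clock())
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0


if __name__ == '__main__':
    meter = FrameRateMeter()
    for _ in range(5):
        time.sleep(0.05)  # stands in for waiting on recv_string()
        fps = meter.tick()
    print('approx. FPS: {:.1f}'.format(fps))
```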
