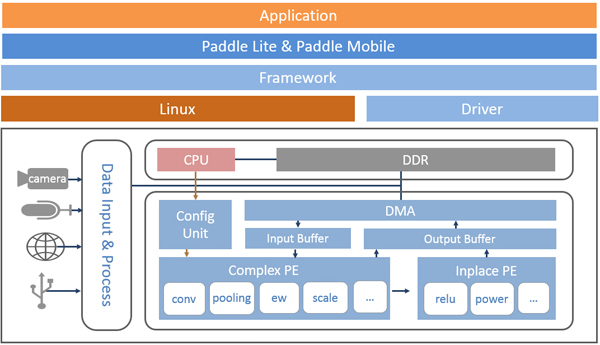

The FZ3 Card is a deep learning accelerator card launched by MYIR in cooperation with Baidu. It is based on the Xilinx Zynq UltraScale+ ZU3EG MPSoC, which integrates a 64-bit quad-core ARM Cortex-A53, a GPU and an FPGA, so it offers multi-core processing, FPGA programmability and hardware decoding of video streams.

The FZ3 Card has a built-in deep learning soft core built on Linux and Baidu's PaddlePaddle deep learning AI (Artificial Intelligence) framework. It is fully compatible with Baidu Brain's model resources and AI development tools such as EasyDL, AI Studio and EasyEdge, so developers and engineers can quickly train models, deploy them and run inference. With these hardware capabilities and software resources, the FZ3 Card significantly lowers the barrier to development validation, product integration, scientific research and teaching.

How do you build applications with the FZ3 Card and Baidu Brain PaddlePaddle? The steps below provide some guidance.

1. Obtaining models

Currently Paddle-Mobile only supports models trained with PaddlePaddle. If your models come from another framework, you need to convert them before running them. Verified networks include ResNet, Inception, SSD, MobileNet, etc.

1.1 Training a model

If you don't have a model, you can use the model provided in the sample or train one yourself.

1. Train the model with the open-source PaddlePaddle deep learning framework. For detailed usage, refer to: PaddlePaddle

2. Train the model on the AI Studio platform. For detailed usage, refer to: AI Studio

3. Upload labeled data to the EasyDL platform and train the model there. For detailed usage, refer to: EasyDL

1.2 Converting a model

1. If you already have a Caffe model, MYIR provides a conversion tool that converts it to a Paddle model. For detailed usage, refer to: X2Paddle_caffe2fluid

2. If you already have a TensorFlow model, MYIR provides a conversion tool that converts it to a Paddle model. For detailed usage, refer to: X2Paddle_tensorflow2fluid

2. Connecting a video data source

2.1 Video data input via USB protocol

You can use a UVC USB camera as the video source. Plug the USB camera into a USB port on the FZ3.

2.2 Video data input via BT1120 protocol

You can use a HiSilicon camera with BT1120 video data output. Connect it to the BT1120 interface of the FZ3 with an FPC cable. For the pin description, refer to the hardware description.

2.3 Video data input via MIPI protocol

You can use a suitable MIPI camera as the video source and connect it to the MIPI interface of the FZ3 with an FPC cable.

2.4 Video data input via GigE protocol

You can use a GigE camera that supports Linux; contact us to adapt the camera's official SDK. Connect the camera to the FZ3 network port.

3. Load the device driver

When the FZ3's acceleration function is used, the prediction library offloads compute-intensive ops to the FPGA through the driver. The driver therefore needs to be loaded before you run your own application. The compiled driver is in /home/root/workspace/driver and comes in two versions: one without log output and one with log output.

Load the driver:

insmod /home/root/workspace/driver/fpgadrv.ko

Unload the driver (normally you don't need to unload it; if you want to load the version with log output, unload the current driver with the following command and then load that version):

rmmod /home/root/workspace/driver/fpgadrv.ko

Set the driver to load automatically at boot:

1) Add a startup script to the system

# Go to the startup script directory

cd /etc/init.d/

# Create and edit a new startup script (the name can be customized)

vim eb.sh

Script content:

#!/bin/sh

chmod +x /home/root/workspace/driver/fpgadrv.ko

insmod /home/root/workspace/driver/fpgadrv.ko

2) Create a symbolic link in the runlevel 5 directory

cd /etc/rc5.d/

ln -s /etc/init.d/eb.sh S99eb

3) Make the script executable, then reboot

chmod +x /etc/init.d/eb.sh

reboot
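After the reboot, you can confirm that the driver was actually loaded by checking /proc/modules. This file lists one loaded kernel module per line, with the module name in the first field (`lsmod` shows the same information). The C++ sketch below assumes that fpgadrv.ko registers under the module name `fpgadrv`. The helper `module_loaded` is hypothetical and not part of the MYIR tools.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <string>

// Returns true if a kernel module called `name` is listed in `path`.
// On Linux, /proc/modules has one line per loaded module, and the first
// whitespace-separated field of each line is the module name.
bool module_loaded(const std::string& name,
                   const std::string& path = "/proc/modules") {
    assert(!name.empty());
    std::ifstream in(path);
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string first;
        if (fields >> first && first == name) {
            return true;
        }
    }
    return false;  // also false if `path` could not be opened
}
```

For example, an application could call `module_loaded("fpgadrv")` at startup and, if it returns false, exit with a hint to run insmod first.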

4. Using the prediction library

The FZ3 supports the Paddle-Mobile prediction library. The compiled prediction library is in /home/root/workspace/paddle-mobile.

How to use it: copy the prediction library's header files and dynamic library into your own application. You can also refer to the sample provided by MYIR.

Paddle-Mobile source code: https://github.com/PaddlePaddle/paddle-mobile

5. Create applications

5.1 Add the prediction library

Copy the dynamic libraries and header files in /home/root/workspace/paddle-mobile/ to your project, then add a reference to the Paddle-Mobile library in CMakeLists.txt:

set(PADDLE_LIB_DIR "${PROJECT_SOURCE_DIR}/lib")

set(PADDLE_INCLUDE_DIR "${PROJECT_SOURCE_DIR}/include/paddle-mobile/")

include_directories(${PADDLE_INCLUDE_DIR})

link_directories(${PADDLE_LIB_DIR})

...

target_link_libraries(${APP_NAME} paddle-mobile)

5.2 Add model

Copy your trained model to your project.

5.3 Add prediction data sources

You can use pictures or camera data as the source of prediction data. To use a camera, connect the corresponding camera first.
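Before opening a camera, it helps to know which video nodes exist. Below is a minimal C++17 sketch that lists the /dev/video* entries using only `std::filesystem`. The helper name `list_video_devices` is hypothetical. The result is sorted lexicographically, so /dev/video10 would sort before /dev/video2.

```cpp
#include <algorithm>
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Returns the paths of all entries in `dir` whose names start with "video"
// (for example /dev/video0, /dev/video2), sorted by name. A missing or
// unreadable directory yields an empty list instead of throwing.
std::vector<std::string> list_video_devices(const std::string& dir = "/dev") {
    assert(!dir.empty());
    std::vector<std::string> found;
    std::error_code ec;
    for (fs::directory_iterator it(dir, ec), end; !ec && it != end;
         it.increment(ec)) {
        const std::string name = it->path().filename().string();
        if (name.rfind("video", 0) == 0) {  // name starts with "video"
            found.push_back(it->path().string());
        }
    }
    std::sort(found.begin(), found.end());
    return found;
}
```

An empty result right after plugging in a camera usually means the device was not recognized. With GCC older than version 9, linking `std::filesystem` code may also require `-lstdc++fs`.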

5.3.1 USB camera

1) After plugging in the camera, run ls /dev/video* to check that the device is accessible. The USB camera normally appears as /dev/video2, which outputs YUV data. If the application reports that the device cannot be found, change the device name in src/video_classify.cpp or src/video_detection.cpp, and check the camera connectivity with the video tool in /home/root/workspace/tools.

// src/video_classify.cpp line 169

config.dev_name = "/dev/video2";

2) Modify the camera resolution if needed:

// src/video_classify.cpp line 170

config.width = 1280;

config.height = 720;

3) Run the video tool

# Read the USB camera data, capture one picture and save it locally

cd /home/root/workspace/tools/video

./v4l2demo -i /dev/video2 -j -n 1

# If in doubt, check the help

./v4l2demo -h

After the program runs, a .jpg file is generated in the build directory; check whether the picture looks correct. If no picture is generated, check whether the USB device is recognized.
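How the sample applies config.width and config.height internally is not shown here. As an assumption, a V4L2 capture program usually requests a resolution with the VIDIOC_S_FMT ioctl from <linux/videodev2.h>. The sketch below does that for a YUYV stream, matching the YUV output of the USB camera, though the exact pixel format your camera offers is an assumption. Drivers may round the request to a supported size, so the hypothetical `set_capture_format` helper reports success only if the size it gets back matches.

```cpp
#include <cassert>
#include <cstring>
#include <fcntl.h>
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <unistd.h>

// Asks a V4L2 capture device for a YUYV stream of width x height.
// Returns true only if the driver accepted exactly that size; drivers are
// allowed to adjust the request to the nearest size they support.
bool set_capture_format(const char* dev, unsigned width, unsigned height) {
    assert(dev != nullptr);
    const int fd = open(dev, O_RDWR);
    if (fd < 0) {
        return false;  // node missing or no permission
    }
    v4l2_format fmt;
    std::memset(&fmt, 0, sizeof(fmt));
    fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    fmt.fmt.pix.width = width;
    fmt.fmt.pix.height = height;
    fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;  // assumption: camera offers YUYV
    fmt.fmt.pix.field = V4L2_FIELD_ANY;
    // VIDIOC_S_FMT writes the format actually chosen back into fmt.
    const bool ok = ioctl(fd, VIDIOC_S_FMT, &fmt) == 0 &&
                    fmt.fmt.pix.width == width &&
                    fmt.fmt.pix.height == height;
    close(fd);
    return ok;
}
```

For example, `set_capture_format("/dev/video2", 1280, 720)` requests the same values as the config lines in step 2).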

5.3.2 BT1120 IPC camera

After the FZ3 receives raw image data over BT1120 and runs inference on it, it can send the result back to the IPC through the serial port or SPI (for the BT1120, serial port and SPI interface descriptions, refer to the hardware description). The frame number of each image can be carried in the pixel data.
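For the serial-port path, here is a minimal C++ sketch using POSIX termios. The serial device node, the 115200 8N1 settings and the comma-separated `frame,label,score` line format are all assumptions for illustration. The real port, baud rate and message format must match the IPC side and the FZ3 hardware description. SPI is not covered.

```cpp
#include <cassert>
#include <cstdio>
#include <fcntl.h>
#include <string>
#include <termios.h>
#include <unistd.h>

// Formats one inference result as a text line. The "frame,label,score"
// layout is a hypothetical protocol; use whatever format the IPC expects.
std::string format_result(unsigned frame, int label, float score) {
    char buf[64];
    std::snprintf(buf, sizeof(buf), "%u,%d,%.3f\n", frame, label, score);
    return std::string(buf);
}

// Writes `line` to a serial port configured as 115200 baud, raw 8N1.
// The device path (e.g. a /dev/ttyPS* node) is an assumption to be checked
// against the FZ3 hardware description.
bool send_result(const char* tty, const std::string& line) {
    assert(tty != nullptr);
    const int fd = open(tty, O_RDWR | O_NOCTTY);
    if (fd < 0) {
        return false;
    }
    termios tio{};
    if (tcgetattr(fd, &tio) != 0) {
        close(fd);
        return false;
    }
    cfmakeraw(&tio);               // 8 data bits, no parity, no echo
    cfsetispeed(&tio, B115200);
    cfsetospeed(&tio, B115200);
    tio.c_cflag |= CLOCAL | CREAD; // ignore modem lines, enable receiver
    tio.c_cflag &= ~CSTOPB;        // 1 stop bit
    const bool ok = tcsetattr(fd, TCSANOW, &tio) == 0 &&
                    write(fd, line.data(), line.size()) ==
                        static_cast<ssize_t>(line.size());
    close(fd);
    return ok;
}
```

Keeping the formatting separate from the port I/O lets the message format be changed, or tested on a PC, without touching the termios setup.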

After connecting the camera, use the video tool in /home/root/workspace/tools to check its connectivity.

1) List the devices; the camera is normally /dev/video1:

ls /dev/video*

/dev/video0 /dev/video1

2) Set the camera parameters

media-ctl -v --set-format '"a0010000.v_tpg":0 [RBG24 1920x1080 field:none]'

3) Run the video tool

# Read BT1120 camera data, capture one picture and save it locally

cd /home/root/workspace/tools/video

./v4l2demo -i /dev/video1 -j -n 1

# If in doubt, check the help

./v4l2demo -h

After the program runs, a .jpg file is generated in the build directory; check whether the picture looks correct. If no picture is generated, check whether the BT1120 cable is connected correctly.
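Besides viewing the picture, you can quickly check a captured .jpg automatically by verifying its JPEG start-of-image (FF D8) and end-of-image (FF D9) markers. The helper below is a hypothetical sketch. It does not decode the image, so it only catches empty or truncated captures, not wrong colors or a wrong resolution.

```cpp
#include <cassert>
#include <fstream>
#include <iterator>
#include <string>
#include <vector>

// A well-formed JPEG file normally starts with the SOI marker (FF D8) and
// ends with the EOI marker (FF D9). Returns false for missing, tiny or
// truncated files.
bool looks_like_jpeg(const std::string& path) {
    assert(!path.empty());
    std::ifstream in(path, std::ios::binary);
    if (!in) {
        return false;
    }
    const std::vector<unsigned char> data(
        (std::istreambuf_iterator<char>(in)), std::istreambuf_iterator<char>());
    if (data.size() < 4) {
        return false;
    }
    return data[0] == 0xFF && data[1] == 0xD8 &&
           data[data.size() - 2] == 0xFF && data[data.size() - 1] == 0xD9;
}
```

Pass it the path of the .jpg that v4l2demo wrote into the build directory.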

5.4 Call the prediction library to load the model and use the prediction data

5.4.1 Initialize the model

Predictor* _predictor_handle = new Predictor();

_predictor_handle->init(model, {batchNum, channel, input_height, input_width}, output_names);

5.4.2 Prepare data

1) Scale the picture to the required input size. If the neural network expects a fixed input size, the picture must be scaled to exactly that size.

2) Preprocess the image (mean subtraction, floating-point conversion, normalization, etc.).

3) Output the data. The FZ3 uses the NHWC format, and data from video is usually already in NHWC, so no NHWC->NCHW conversion is required.
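Steps 1) and 2) can be sketched in C++ as follows. This is an illustration, not MYIR's sample code. It uses nearest-neighbour scaling for brevity; bilinear scaling usually gives better accuracy. The per-channel mean and scale values are hypothetical placeholders that must match how your model was trained. The data stays in interleaved HWC order (NHWC with N = 1), consistent with step 3).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Nearest-neighbour resize of an interleaved (HWC) 8-bit image.
std::vector<uint8_t> resize_nearest(const std::vector<uint8_t>& src,
                                    int src_w, int src_h, int channels,
                                    int dst_w, int dst_h) {
    assert(src.size() == static_cast<std::size_t>(src_w) * src_h * channels);
    std::vector<uint8_t> dst(static_cast<std::size_t>(dst_w) * dst_h * channels);
    for (int y = 0; y < dst_h; ++y) {
        const int sy = y * src_h / dst_h;  // nearest source row
        for (int x = 0; x < dst_w; ++x) {
            const int sx = x * src_w / dst_w;  // nearest source column
            for (int c = 0; c < channels; ++c) {
                dst[(static_cast<std::size_t>(y) * dst_w + x) * channels + c] =
                    src[(static_cast<std::size_t>(sy) * src_w + sx) * channels + c];
            }
        }
    }
    return dst;
}

// Converts HWC uint8 pixels to float: out = (pixel - mean[c]) * scale[c].
// The layout is left unchanged, so no transpose to NCHW is performed.
std::vector<float> normalize_hwc(const std::vector<uint8_t>& img, int channels,
                                 const std::vector<float>& mean,
                                 const std::vector<float>& scale) {
    assert(channels > 0 && img.size() % channels == 0);
    assert(mean.size() == static_cast<std::size_t>(channels));
    assert(scale.size() == static_cast<std::size_t>(channels));
    std::vector<float> out(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) {
        const std::size_t c = i % channels;  // channel of this element
        out[i] = (static_cast<float>(img[i]) - mean[c]) * scale[c];
    }
    return out;
}
```

A typical chain would call `resize_nearest` on a 1280x720 RGB frame with the network's input_width and input_height, then `normalize_hwc`. The resulting vector's `data()` pointer would then be the `inputs` argument of predict().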

5.4.3 Predict data

bool predict(const float* inputs, vector &outputs, vector &output_shapes);
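For a classification network, the prediction result is usually reduced to the index of the highest score. The element types of `outputs` and `output_shapes` cannot be recovered from the signature above. The hypothetical helper below therefore takes a plain `const float*` score buffer and a `std::vector<int>` shape; adapt it to the types the Predictor class actually uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Number of elements described by a shape such as {1, 1000}.
std::size_t element_count(const std::vector<int>& shape) {
    std::size_t n = 1;
    for (int d : shape) {
        assert(d > 0);
        n *= static_cast<std::size_t>(d);
    }
    return n;
}

// Index of the largest score (the top-1 class of a classification output).
// Ties resolve to the first maximum; returns -1 if there is no output.
int top1(const float* scores, const std::vector<int>& shape) {
    if (scores == nullptr || shape.empty()) {
        return -1;
    }
    const std::size_t n = element_count(shape);
    return static_cast<int>(std::max_element(scores, scores + n) - scores);
}
```

Detection networks such as SSD return boxes rather than a score vector, so they need different post-processing.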

Do you have ideas for building your own applications with MYIR's FZ3 Card and Baidu Paddle? Some typical applications are shown below for reference.

More information about the FZ3 Card is available on MYIR's website:

http://www.myirtech.com/list.asp?id=630

About MYIR

MYIR Tech Limited is a global provider of ARM hardware, software tools and design solutions for embedded applications. We support our customers with a wide range of services to accelerate their path from project to market.

We sell board-level products such as development boards, single board computers and CPU modules to help with evaluation, prototyping, system integration or creating your own applications. Inside China, MYIR also provides charging pile billing control units, charging control boards and related solutions. Our products are widely used in industrial control, medical devices, consumer electronics, telecommunication systems, Human Machine Interface (HMI) and other embedded applications.

MYIR has an experienced team and provides custom services based on many processors (especially ARM processors) to help customers turn their ideas into reality.

More information about MYIR can be found at: www.myirtech.com
